How to code AI? This question has become increasingly important for anyone interested in artificial intelligence and machine learning.

Whether you're a beginner looking to explore AI or an experienced developer aiming to improve your skills, understanding how to build AI systems is essential.

AI has transformed industries, from healthcare and finance to entertainment and marketing, making it a crucial area of expertise for developers.

Many organizations now study how AI-driven automation and data analytics influence business decisions, such as how retailers allocate their marketing budgets.

The process starts with defining the problem and collecting the right data. Once the data is preprocessed, choosing the right model is key, whether it’s a traditional machine learning model or a more complex deep learning architecture.

Training the model, evaluating its performance, and fine-tuning the hyperparameters come next, ensuring that the AI can make accurate predictions or decisions.

Additionally, once the model is trained, deploying it for real-world use and continuously monitoring its performance is essential.

Ethical considerations also play a critical role, ensuring AI is developed responsibly.

In this blog, we will explore and understand the steps and concepts that are vital for anyone aiming to code AI.

The Fundamentals of AI: Essential Concepts

At its core, AI refers to systems designed to perform tasks that traditionally require human intelligence, such as understanding language, recognizing images, or making informed predictions.

Understanding AI vs machine learning helps clarify how AI encompasses broader goals of intelligence, while ML focuses on pattern recognition and learning from data.

The most common AI systems in use today rely on the following technologies:

- Machine Learning (ML): Algorithms that learn and improve from data over time, rather than following rules that are explicitly programmed for each task.

- Deep Learning: A specialized subset of ML, utilizing neural networks to detect intricate patterns and structures within data.

- Natural Language Processing (NLP) and Natural Language Generation (NLG): Technologies powering virtual assistants, chatbots, and large language models (LLMs) for language understanding and generation.

These technologies form the backbone of modern generative AI. To gain a deeper understanding of the various types of AI, including narrow, general, and superintelligent AI, check out our guide on the advantages and challenges of AI.

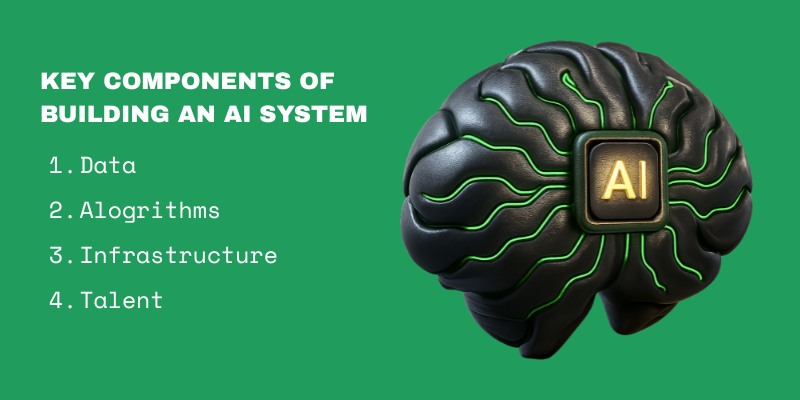

4 Key Components for Building an AI System

To create a successful AI system, you’ll require four essential elements.

- Data: The backbone of any AI model, requiring high-quality, well-labeled data for training and validation.

- Algorithms: Models capable of learning from the data, such as neural networks, decision trees, and transformers.

- Infrastructure: Adequate computational resources, often cloud-based, to train the models and deploy them for real-world use.

- Talent: A team of engineers, data scientists, and subject-matter experts to design, build, and assess the AI system.

AI development is a collaborative, multidisciplinary process, demanding cooperation between product, engineering, and research teams. In many organizations, this collaboration overlaps with software development services, ensuring seamless integration between model design and deployment pipelines.


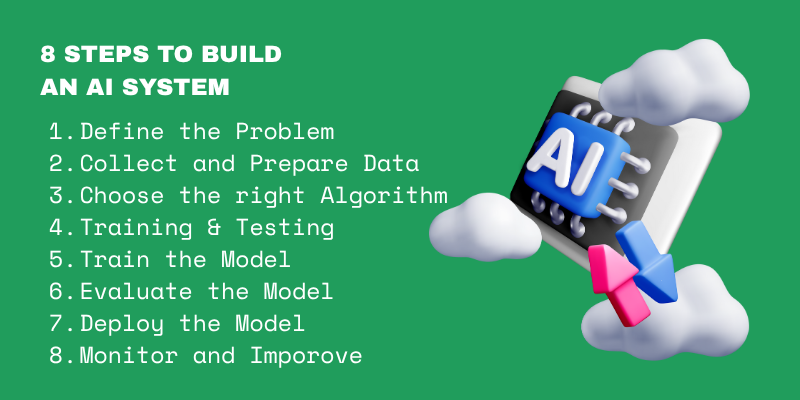

Step-by-Step Guide to Building an AI System

Building an AI system involves a series of steps, from understanding the problem to deploying the final model.

Here’s a structured approach to guide you through the process:

- Define the Problem

- Collect and Prepare Data

- Choose the Right Algorithm

- Split Data for Training and Testing

- Train the Model

- Evaluate the Model

- Deploy the Model

- Monitor and Improve

Step 1: Pick a Problem and Define Success

When building an AI system, the first step is to select a problem to solve.

The problem could fall under various categories such as classification, where the goal is to categorize data into predefined classes, regression for predicting continuous values, natural language processing (NLP) for working with text data, or computer vision for tasks related to images and videos.

Once the problem is defined, it's important to establish measurable success metrics. These metrics help in evaluating the effectiveness of the AI model.

For classification tasks, common metrics include accuracy, precision, recall, and F1-score. In regression, mean squared error (MSE) and R² are used to measure the model’s performance.
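
To make these metrics concrete, here is a minimal sketch that computes them in plain Python. The function names are illustrative and not taken from any library, and the classification helper assumes binary labels where `1` marks the positive class.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1-score for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def mse(y_true, y_pred):
    """Mean squared error for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination; assumes y_true is not constant."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot
```

In practice, most teams rely on a tested library for these calculations; writing them out once simply shows what each number measures.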

NLP tasks often use metrics like BLEU score and ROUGE, while computer vision tasks may focus on intersection over union (IoU) and mean average precision (mAP).
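
As an illustration of one of these metrics, the sketch below computes intersection over union for two bounding boxes. It assumes each box is a tuple of `(x_min, y_min, x_max, y_max)`; that layout is a convention chosen for this example, and other tools may order the coordinates differently.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Each box is (x_min, y_min, x_max, y_max), an assumed layout.
    """
    # Corners of the overlapping rectangle, if any.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Width and height are clamped at zero when the boxes do not overlap.
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0
```

An IoU of 1.0 means the predicted box matches the labeled box exactly, while 0.0 means they do not overlap at all.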

Additionally, there are several constraints to consider during development. These can include time limitations for model training, data quality and availability, computational resources required for training, and the interpretability of the model, especially in mission-critical applications.

Step 2: Collect and Prepare Data

Collecting and preparing data is a critical step in building an AI system. The first phase involves gathering high-quality, relevant data that aligns with the problem you're solving.

This data needs to be properly labeled if it’s for supervised learning tasks. For example, in image classification, each image should be tagged with its corresponding label, like "cat" or "dog."

Once the data is collected, the next step is to clean, split, and transform it. Cleaning involves removing noise, correcting errors, and handling missing values. After cleaning, the data is split into training, validation, and test sets to ensure the model is evaluated on unseen data.

Normalization is then applied to scale the data, ensuring features like age or income are in comparable ranges, preventing certain features from dominating the model’s learning process.
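
A minimal sketch of min-max scaling follows, assuming a single numeric feature stored as a Python list. The helper names are hypothetical. The scaling range is learned from the training data only and then reused for other splits, which keeps information from the validation and test sets out of training.

```python
def fit_min_max(train_values):
    """Learn the scaling range from the training data only."""
    return min(train_values), max(train_values)


def apply_min_max(values, lo, hi):
    """Scale values using a range learned earlier."""
    span = hi - lo
    if span == 0:
        # A constant feature carries no information; map it to zero.
        return [0.0 for _ in values]
    return [(v - lo) / span for v in values]


# Example: ages in the training split define the range.
train_ages = [20, 30, 40]
lo, hi = fit_min_max(train_ages)
scaled_train = apply_min_max(train_ages, lo, hi)
# New data is scaled with the same range and may fall outside [0, 1].
scaled_new = apply_min_max([50], lo, hi)
```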

Data augmentation is often used, especially in image and text-based tasks, to artificially increase the diversity of your dataset by applying random transformations, like rotations or flips for images.
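
The idea can be sketched with a toy image stored as a list of pixel rows. This is an illustrative assumption; real pipelines usually work on arrays or tensors and use a dedicated augmentation library. The function names are hypothetical.

```python
import random


def horizontal_flip(image):
    """Mirror each row of the image left to right."""
    return [row[::-1] for row in image]


def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image, rng):
    """Apply each transformation with probability 0.5."""
    out = image
    if rng.random() < 0.5:
        out = horizontal_flip(out)
    if rng.random() < 0.5:
        out = rotate_90(out)
    return out


# A seeded generator makes the random choices repeatable.
rng = random.Random(0)
augmented = augment([[1, 2], [3, 4]], rng)
```

Only apply transformations that preserve the label. Flipping a photo of a cat still shows a cat, but flipping a handwritten digit can change its meaning.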

Feature engineering is also essential, where you create new features from existing data to improve the model’s performance. These steps ensure that the data is ready for training and that the AI system can learn effectively from it.


Step 3: Choose the Right Algorithm

One of the most important steps in coding AI is choosing the right algorithm. The algorithm you select should be based on the problem you're solving and the type of data you're working with.

For simpler tasks, classic machine learning (ML) algorithms might be sufficient. Linear regression works well for predicting continuous values, while logistic regression is ideal for binary classification problems.
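
To show how small a classic model can be, here is a sketch of linear regression with a single input feature, fitted with the standard least-squares formulas. The function names are hypothetical, and the code assumes the inputs are not all identical.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Spread of x, and how x and y vary together.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx  # assumes the xs are not all the same value
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(x, slope, intercept):
    """Predict a continuous value for a new input."""
    return slope * x + intercept


slope, intercept = fit_line([0, 1, 2, 3], [1, 3, 5, 7])
```

A simple baseline like this is worth building first, because it gives you a reference point for judging whether a more complex model is actually better.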

Decision trees offer interpretability, and gradient boosting algorithms like XGBoost are powerful for handling complex datasets.

For more sophisticated AI tasks, neural networks are often the best option. Multi-layer Perceptrons (MLPs) are used for simpler deep learning problems, whereas Convolutional Neural Networks (CNNs) are excellent for image recognition and computer vision tasks.

When working with sequential data, such as text or time-series, Recurrent Neural Networks (RNNs) and Transformers are highly effective, with Transformers being widely used in natural language processing (NLP) for tasks like translation or text generation.

Key considerations when choosing an algorithm include:

- Problem complexity (simple vs. complex)

- Data type (sequential, image, tabular)

- Interpretability and performance requirements

Step 4: Split Data for Training and Testing

Splitting data into training and testing sets is crucial for ensuring your AI model generalizes well. Typically, the data is divided into training, validation, and testing sets.

The training set is used to teach the model, the validation set is used to tune hyperparameters, and the testing set is reserved for evaluating the model's final performance.
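
A three-way split can be sketched as follows. The 70/15/15 proportions and the helper name are assumptions for illustration; the right proportions depend on how much data you have.

```python
import random


def split_dataset(items, val_fraction=0.15, test_fraction=0.15, seed=42):
    """Shuffle once, then cut into train, validation, and test sets."""
    shuffled = list(items)
    # A seeded generator makes the split repeatable across runs.
    random.Random(seed).shuffle(shuffled)

    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)

    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


train, val, test = split_dataset(range(100))
```

A random shuffle suits independent samples. For time-series data, a chronological split is usually safer, since shuffling would let the model train on data from after the period it is tested on.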

When training a model, key components like loss functions and optimizers play a significant role.

- Loss Functions: Measure the discrepancy between predicted and actual values. Examples include Mean Squared Error (MSE) for regression and Cross-Entropy Loss for classification tasks.

- Optimizers: Adjust model weights to minimize loss. Common optimizers include Stochastic Gradient Descent (SGD) and Adam.
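
The sketch below shows how a loss function and an optimizer fit together, using a tiny model `y = w * x + b` trained with plain stochastic gradient descent on squared error. All names, the learning rate, and the epoch count are illustrative assumptions.

```python
import math


def mse_loss(preds, targets):
    """Mean squared error, a common regression loss."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(preds)


def binary_cross_entropy(probs, targets, eps=1e-12):
    """Cross-entropy loss for binary classification."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1 - eps)  # avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(probs)


def sgd_step(w, b, x, y, lr):
    """One SGD update on a single sample for squared error."""
    error = (w * x + b) - y
    grad_w = 2 * error * x  # derivative of error**2 with respect to w
    grad_b = 2 * error      # derivative of error**2 with respect to b
    return w - lr * grad_w, b - lr * grad_b


def train(xs, ys, lr=0.05, epochs=200):
    """Repeat SGD updates over the dataset for a number of epochs."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            w, b = sgd_step(w, b, x, y, lr)
    return w, b


# Data generated from y = 2x + 1, so training should approach w=2, b=1.
w, b = train([0, 1, 2, 3], [1, 3, 5, 7])
```

Deep learning frameworks compute the gradients automatically, but the loop has the same shape: predict, measure the loss, and nudge the weights in the direction that reduces it.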

In addition to these, hyperparameters such as learning rate, batch size, epochs, and regularization techniques significantly influence the model’s training process.

These hyperparameters need to be set thoughtfully to achieve optimal model performance.

Finally, ensuring reproducibility in experiments is critical for consistent and reliable results. This involves setting random seeds, using config files for managing hyperparameters, and employing experiment tracking tools to monitor and compare performance across different model runs.

Hyperparameters used:

- Learning rate

- Batch size

- Epochs

- Regularization

Reproducibility involves:

- Random seeds

- Config files

- Experiment tracking

These hyperparameters and reproducibility practices are described below:

- Learning Rate: Controls how much the model’s weights are adjusted during each training step.

- Batch Size: Defines the number of samples processed before the model updates its weights.

- Epochs: Specifies how many times the entire dataset is passed through the model during training.

- Regularization: Methods like L2 regularization or dropout help prevent overfitting.

- Seeds: Set random seeds to ensure consistency in initialization and results.

- Config Files: Use configuration files to manage hyperparameters and training settings.

- Experiment Tracking: Tools like MLflow and Weights & Biases (W&B) help track, monitor, and compare model performance.
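
One way to combine seeds and config files is sketched below, using only the Python standard library. The `TrainConfig` fields and default values are hypothetical examples, not recommended settings.

```python
import json
import random
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical training settings kept together in one place."""
    learning_rate: float = 0.001
    batch_size: int = 32
    epochs: int = 10
    weight_decay: float = 0.0  # strength of L2 regularization
    seed: int = 42


def set_seed(seed):
    """Seed Python's random module.

    Libraries such as NumPy and PyTorch keep their own random state
    and need to be seeded separately.
    """
    random.seed(seed)


def config_to_json(config):
    """Serialize the config so it can be saved next to the results."""
    return json.dumps(asdict(config), sort_keys=True)


def config_from_json(text):
    """Rebuild a config from its saved JSON form."""
    return TrainConfig(**json.loads(text))


config = TrainConfig(learning_rate=0.01, epochs=20)
set_seed(config.seed)
saved = config_to_json(config)
```

Storing the serialized config alongside each run's metrics makes it possible to tell later exactly which settings produced which result.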

Step 5: Train The Model

Training a model involves feeding it data and adjusting its parameters to minimize errors and improve its predictions.

This is a key phase in AI development, where the model learns to identify patterns in the data. Tools like Keras, PyTorch Lightning, and TensorFlow help manage the entire training process, making it easier to build, train, and fine-tune models.

To train an AI model effectively, you’ll need the right resources and setup.

The process requires:

- GPU/TPU compute: High-performance hardware is essential for speeding up the training process, especially for large datasets and deep learning models.

- Clean train/validation/test split: Ensuring your data is properly split into training, validation, and testing sets is crucial for evaluating model performance and avoiding data leakage.

- Monitoring for overfitting or underfitting: It's important to regularly monitor the model for signs of overfitting or underfitting, which can lead to poor generalization on new data.
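
A common way to act on overfitting is early stopping: halt training once the validation loss stops improving. The sketch below assumes you already record one validation loss per epoch; the function name and the `patience` default are illustrative.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a list of validation losses.

    Training stops once the loss has failed to improve by more than
    min_delta for `patience` epochs in a row.
    """
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss = loss
            best_epoch = epoch
            waited = 0
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    # Ran out of epochs without triggering the stop condition.
    return len(val_losses) - 1, best_epoch


# Validation loss falls, then rises again as the model starts to overfit.
losses = [1.0, 0.8, 0.7, 0.72, 0.75, 0.9, 1.1]
stop_epoch, best_epoch = early_stopping_epoch(losses, patience=3)
```

The weights saved at `best_epoch` are usually the ones to keep, since later epochs fit the training data more closely while performing worse on unseen data.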

For code-focused AI models, human feedback is vital to ensure quality results and prevent issues like hallucinations.

Partnering with an AI Automation Agency can help streamline annotation, feedback loops, and training workflows for scalable model improvements.

Code-first human annotation services become particularly useful when you need to scale the evaluation process efficiently and improve the model’s overall performance.

Step 6: Evaluate and Iterate on the Model

Evaluating and iterating on the model is essential to ensure it performs effectively and generalizes well to new, unseen data.

During this phase, you assess the model’s accuracy, fine-tune it, and identify areas for improvement.

To evaluate the model, several metrics are commonly used:

- Accuracy: Measures the overall correctness of the model's predictions.

- F1 Score: Provides a balance between precision and recall, especially useful for imbalanced datasets.

- ROC-AUC: Measures how well the model ranks positive examples above negative ones across all decision thresholds, which makes it useful for comparing classifiers.

- RMSE (Root Mean Squared Error): Commonly used for regression problems, measuring the average magnitude of errors.

- BLEU Score: Used in NLP tasks, particularly for evaluating the quality of text generation, such as machine translation.
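
Two of these metrics are sketched below in plain Python. The ROC-AUC version uses the pairwise definition (the share of positive/negative pairs in which the positive example gets the higher score), which is easy to read but slow on large datasets. The function names are illustrative, and labels are assumed to be `0` or `1`.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error for regression."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def roc_auc(y_true, scores):
    """ROC-AUC as the chance a positive outranks a negative."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("ROC-AUC needs both positive and negative examples")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))
```

A ROC-AUC of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which is why it is often reported alongside accuracy on imbalanced datasets.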

Step 7: Deploy The Model

Once the model is trained and evaluated, the next crucial step is to deploy it for real-world use. Deployment involves making the model accessible via APIs or other services, ensuring it can handle incoming data and provide predictions or decisions efficiently. In many enterprise settings, this stage plays a central role in digital transformation, where organizations partner with experts such as Centric to integrate AI models into scalable, production-ready systems.

For exporting and serving the model, several methods are commonly used:

- FastAPI/Flask Endpoints: These frameworks allow you to expose your model as a web service, making it accessible through HTTP requests. FastAPI is known for its speed and ease of use, while Flask is widely used and has good integration with Python-based models.

- ONNX (Open Neural Network Exchange): An open, cross-platform format for representing models. Exporting to ONNX lets you run the model with compatible runtimes on various platforms, making it easier to integrate with other tools and systems.

- Docker Images: Packaging the model in a Docker container ensures that the environment is consistent across different systems, simplifying deployment to cloud servers or on-premises environments.
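
The sketch below shows the general shape of a prediction endpoint. To stay dependency-free it uses Python's standard-library `http.server` rather than FastAPI or Flask, so treat it as an outline of the request flow and not as a production server. The `predict` function, its weights, and the `{"features": [...]}` payload format are hypothetical stand-ins for a real trained model.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Hypothetical stand-in for a trained model."""
    weights = [0.4, 0.6]
    return sum(w * x for w, x in zip(weights, features))


def handle_request(body):
    """Validate the JSON body and return (status_code, response)."""
    try:
        payload = json.loads(body)
        features = payload["features"]
        if not isinstance(features, list) or len(features) != 2:
            raise ValueError("expected exactly two features")
        features = [float(x) for x in features]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": "expected JSON with a 'features' list of 2 numbers"}
    return 200, {"prediction": predict(features)}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, response = handle_request(self.rfile.read(length))
        data = json.dumps(response).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def run(host="127.0.0.1", port=8000):
    """Start the server; blocks until interrupted."""
    HTTPServer((host, port), PredictHandler).serve_forever()
```

Calling `run()` starts the server locally. Keeping validation and prediction in `handle_request`, separate from the HTTP handler, makes the logic easy to test and to move into a web framework later.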

Step 8: Monitor and Improve

After deploying the model, continuous monitoring and improvement are necessary to ensure it remains effective and up-to-date.

Monitoring the model’s performance, detecting any issues, and making adjustments when needed is a critical part of its lifecycle.

CI/CD for models is essential to automate the testing, building, and deployment process for continuous updates and improvements.

Effective monitoring helps track the model’s performance and identifies when it’s underperforming or needs retraining.

CI/CD for models requires:

- Tests

- Linting

- Auto-builds

Model metrics to monitor:

- Drift

- Latency

- Cost

- Retraining triggers

These CI/CD checks and monitoring metrics are described below:

- Tests: Automated tests ensure that the model's code remains reliable and works as expected, even after changes or updates.

- Linting: Linting checks the code for style errors, potential issues, and best practices, ensuring that the codebase stays clean and maintainable.

- Auto-Builds: CI/CD pipelines can automatically build the model whenever there’s an update, saving time and reducing errors in the process.

- Drift: Monitors if the model's predictions deviate from expected performance over time due to changes in the underlying data.

- Latency: Tracks the time it takes for the model to respond to requests, ensuring that response times remain fast and efficient.

- Cost: Keeps an eye on resource consumption, ensuring that the model's performance doesn't lead to excessive operational costs.

- Retraining Triggers: Monitors for performance dips or significant changes in the data that may require retraining the model to maintain its accuracy and reliability.
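
A few of these checks can be sketched in plain Python. The drift check below uses the population stability index (PSI), which is one of several possible drift measures and is chosen here only as an example. The function names, the 0.2 PSI threshold, and the 0.05 accuracy-drop threshold are assumptions for illustration and should be tuned for your own system.

```python
import math


def psi(expected, actual, bins=10):
    """Population stability index between two samples of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the reference range go to the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids taking the log of zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def latency_percentile(latencies_ms, pct=95):
    """Nearest-rank percentile of response times."""
    ordered = sorted(latencies_ms)
    rank = max(1, -(-pct * len(ordered) // 100))  # ceiling division
    return ordered[rank - 1]


def needs_retraining(psi_value, accuracy, baseline_accuracy,
                     psi_threshold=0.2, max_accuracy_drop=0.05):
    """Flag retraining on input drift or a drop in accuracy."""
    drifted = psi_value > psi_threshold
    degraded = (baseline_accuracy - accuracy) > max_accuracy_drop
    return drifted or degraded
```

Here `expected` would be feature values from the training data and `actual` the values seen in production, so a rising PSI signals that the live data no longer looks like what the model was trained on.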

Four Common Pitfalls and Fixes

Several common pitfalls can affect the performance of AI models, including:

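- Data Leakage: Occurs when information from the validation or test data, or information that would not be available at prediction time, influences training, leading to over-optimistic results. Prevent it by separating training, validation, and test data before fitting any preprocessing steps.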

- Overfitting: The model learns noise from the data, leading to poor generalization. Avoid by using regularization, cross-validation, and simpler models.

- Unstable Training: The model fails to converge due to large weight updates. Fix by adjusting the learning rate or using adaptive optimizers like Adam.

- Bad Labels: Incorrect labels can lead to poor model performance. Ensure accurate, consistent, and representative labeling.
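
One inexpensive check for bad labels is to look for identical inputs that were given different labels. The sketch below assumes a text dataset stored as `(text, label)` pairs; the function name and the normalization (trimming whitespace and lowercasing) are illustrative choices.

```python
from collections import defaultdict


def find_conflicting_labels(examples):
    """Return inputs that appear with more than one label."""
    labels_by_input = defaultdict(set)
    for text, label in examples:
        # Normalize so trivial differences do not hide duplicates.
        labels_by_input[text.strip().lower()].add(label)
    return {
        text: sorted(labels)
        for text, labels in labels_by_input.items()
        if len(labels) > 1
    }


examples = [
    ("Great product", "positive"),
    ("great product ", "negative"),
    ("Arrived late", "negative"),
]
conflicts = find_conflicting_labels(examples)
```

Conflicts found this way are good candidates for a second review, since at least one of the labels in each group is likely to be wrong.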

Human Expertise: Essential for Every Stage of AI Development

AI systems rely heavily on human input at every stage, from data preparation to post-training evaluation.

As AI models become increasingly complex, especially those generating code or making high-stakes decisions, the demand for specialized reviewers, engineers, and evaluators grows.

To meet this demand, more teams are turning to code-focused human data services, which employ vetted developers who understand the standards for "good" AI.

These professionals can scale feedback in real-time, ensuring that AI systems are fine-tuned to meet the required performance and quality.

Interested in a solution that combines talent sourcing with scalable AI training workflows? Discover more about our human data labeling service or hire expert AI developers from Latin America.

Conclusion

How to code AI? This question forms the foundation of understanding the complexities and stages of building an AI system.

From data collection to deployment, human expertise plays a pivotal role in ensuring that models are built and fine-tuned effectively.

By leveraging specialized knowledge and scalable AI training workflows, developers and reviewers can provide the necessary feedback to enhance model performance and reliability.

As AI continues to evolve, combining human input with advanced technology will remain crucial for creating accurate, ethical, and high-performance AI systems.